ChatGPT is great, but right now, it’s limited to just text — text in, text out. GPT-4 was supposed to expand on this by adding image processing to allow it to generate text based on images.

OpenAI has yet to release this feature, however, which is where MiniGPT-4 comes in. This open source project gives us a preview of what the image processing in GPT-4 might be like — and it’s pretty neat.

What is MiniGPT-4?

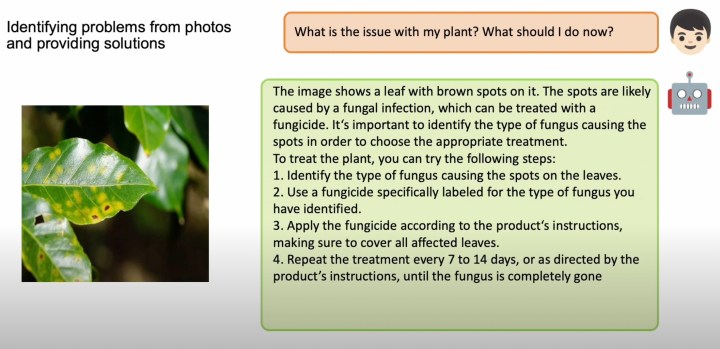

MiniGPT-4 is an open source project that was posted on GitHub to demonstrate vision-language capabilities in an AI system. Some examples of what it can do include generating descriptions of images, writing stories based on images, or even creating websites just from drawings.

Despite what the name implies, MiniGPT-4 is not officially connected to OpenAI or GPT-4. It was created by a group of Ph.D. students based in Saudi Arabia at the King Abdullah University of Science and Technology. It’s also based on a different large language model (LLM) called Vicuna, which itself was built on the open-source Large Language Model Meta AI (LLaMA). Vicuna is not quite as powerful as ChatGPT, but as graded by GPT-4 itself, it reaches about 90% of ChatGPT’s quality.

How to use MiniGPT-4

MiniGPT-4 is just a demo and is still in its first version. For now, it can be accessed for free at the group’s official website. To use it, just drag an image in or click “Drop Image Here.” Once it’s uploaded, type your prompt into the text box.

What kinds of things should you try out? Well, asking MiniGPT-4 to describe an image is simple enough. But maybe you need some copy for an Instagram post for your company. Or maybe you want to know the ingredients needed for an interesting dish, or even a recipe for how to cook it. MiniGPT-4 can handle these tasks surprisingly well.

The coding aspects are a bit rougher around the edges. Turning a simple napkin drawing into a functioning website was a trick shown off by OpenAI when GPT-4 was first announced, but MiniGPT-4 doesn’t seem to handle that quite as well just yet. ChatGPT will provide more accurate code. In fact, running the code MiniGPT-4 produces through ChatGPT or GPT-4 will net you better results.

One thing to note is that MiniGPT-4 does use your local system’s GPU. So, unless you have a fairly powerful discrete GPU, you may find the experience slow. For context, I tried it out on an M2 Max MacBook Pro, and it took around 30 seconds to generate text based on an image I uploaded.

Limitations of MiniGPT-4

The speed of MiniGPT-4 is certainly a limitation. If you’re trying to access this without some decent graphics, it’s too slow to feel responsive. If you’re used to the speed of cloud-based ChatGPT or even Bing Image Creator, MiniGPT-4 is going to feel painfully slow.

Beyond that, MiniGPT-4 has all the same limitations as ChatGPT, Google Bard, or any other AI chatbot: it can “hallucinate,” or make up information.
