ChatGPT rose to viral fame over the past six months, but it didn’t come out of nowhere. According to a blog post published by Microsoft on Monday, OpenAI, the company behind ChatGPT, reached out to Microsoft to build AI infrastructure on thousands of Nvidia GPUs more than five years ago.

OpenAI and Microsoft’s partnership has attracted a lot of attention recently, especially after Microsoft made a $10 billion investment in the research group behind tools like ChatGPT and DALL-E 2. However, according to Microsoft, the partnership started long ago. Bloomberg reports that Microsoft has since spent “several hundred million dollars” developing the infrastructure to support ChatGPT and projects like Bing Chat.

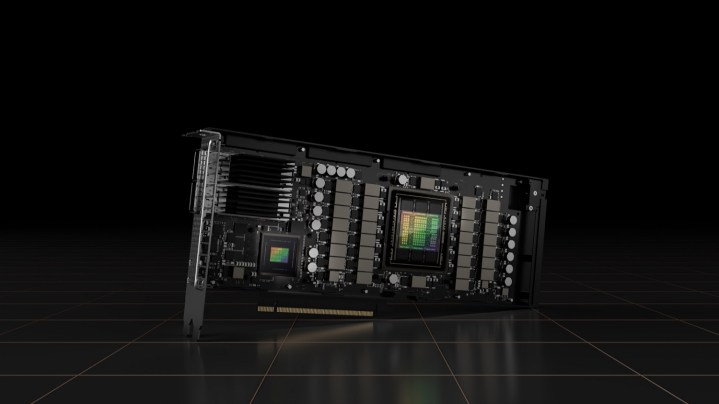

Much of that money went to Nvidia, which is now at the forefront of the computing hardware required to train AI models. Instead of gaming GPUs like you’d find on a list of the best graphics cards, Microsoft went after Nvidia’s enterprise-grade GPUs like the A100 and H100.

It’s not as simple as putting graphics cards together and training a language model, though. As Nidhi Chappell, Microsoft head of product for Azure, explains: “This is not something that you just buy a whole bunch of GPUs, hook them together, and they’ll start working together. There is a lot of system-level optimization to get the best performance, and that comes with a lot of experience over many generations.”

With the infrastructure in place, Microsoft is now opening up its hardware to others. The company announced on Monday in a separate blog post that it would offer Nvidia H100 systems “on-demand in sizes ranging from eight to thousands of Nvidia H100 GPUs,” delivered through Microsoft’s Azure network.

The popularity of ChatGPT has been a boon for Nvidia, which has invested in AI hardware and software for several years. AMD, Nvidia’s main competitor in gaming graphics cards, has been attempting to make headway in the space with accelerators like the Instinct MI300.

According to Greg Brockman, president and co-founder of OpenAI, training ChatGPT wouldn’t have been possible without the horsepower provided by Microsoft: “Co-designing supercomputers with Azure has been crucial for scaling our demanding AI training needs, making our research and alignment work on systems like ChatGPT possible.”

Nvidia is expected to reveal more about future AI products at its GPU Technology Conference (GTC), which kicks off with a keynote presentation on March 21. Microsoft will expand on its own AI road map later this week, with a presentation on the future of AI in the workplace scheduled for March 16.
